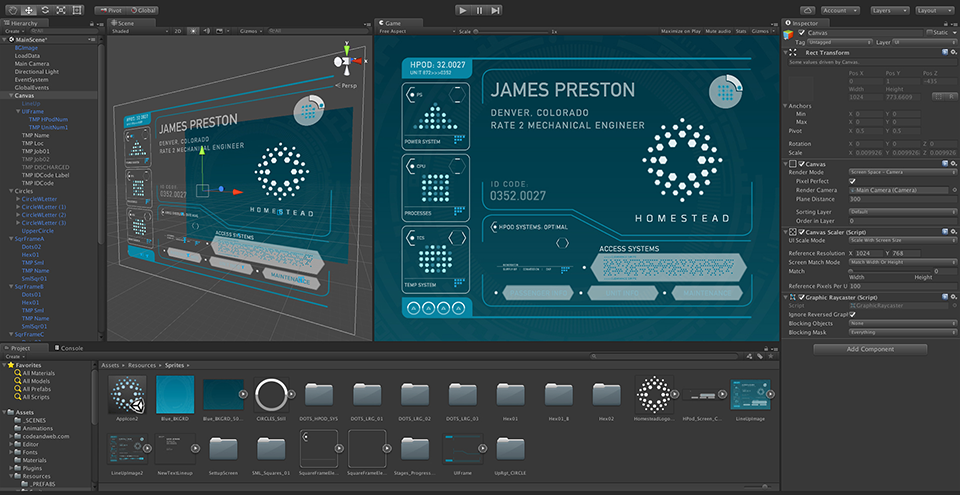

Several years ago, I created my own video playback software, which has been heavily used on many of the movies my colleagues and I have worked on. While that software is still quite capable, as someone who has always been interested in new technologies, I’m always looking for new ways to push the boundaries of what we can do. Although I’ve been closely monitoring the progression of the Unity game engine since version one, it wasn’t until fairly recently that the feature set advanced to the point of being a viable platform upon which I could create new playback software. Between the plugins available on the Unity Asset Store and custom ones I’ve built myself, I’ve been excited to create ways of bringing the interactivity of on-set graphics to a whole new level.

When I was asked to do Sony’s futuristic sci-fi movie, Passengers, it seemed like a great opportunity to put what I’d been doing in Unity through its paces. While great actors, such as Chris Pratt, can make nearly anything they do believable even if all they’re interacting with is a green screen, it is my opinion that the more realistic and interactive we can make the technology they’re performing with on set, the easier it is for them to stay fully immersed in their character’s world. That is why it was a big deal to me to overcome the challenges we were presented with on Passengers’ cafeteria set. We had to seamlessly tie together the actions of a tablet that was floating on glass with the 4K TV directly behind it. Traditionally, a scene like this would have been triggered remotely, which requires choreographing the movements of the actor’s hands and the order in which they press the buttons on screen. It wouldn’t work well to have the actor pressing the left side of the screen while I’m triggering a button on the right.

To eliminate the need for any graphics puppeteering or choreography, I decided to use OSC (Open Sound Control) to send network commands from the tablet to the computer playing the graphic on the TV screen. That way, when Chris interacted with the tablet on set, the graphics on the larger monitor would react automatically. Instead of having to remember any kind of choreography, he could focus on his character’s predicament of having minimal access to the food dispenser.
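For readers unfamiliar with OSC, here is a minimal Python sketch of what such a network command might look like on the wire. It is not the code that ran on set: the address `/tablet/button`, the argument layout (a button number followed by a pressed flag), and the helper names are all assumptions made for illustration. It only follows the basic OSC 1.0 message layout, in which the address and type tags are null-terminated strings padded to four bytes and numbers are big-endian.

```python
import socket
import struct


def _pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC strings require."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, *args) -> bytes:
    """Encode an OSC 1.0 message with int32, float32 and string arguments."""
    type_tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, int):
            type_tags += "i"
            payload += struct.pack(">i", arg)   # big-endian 32-bit integer
        elif isinstance(arg, float):
            type_tags += "f"
            payload += struct.pack(">f", arg)   # big-endian 32-bit float
        elif isinstance(arg, str):
            type_tags += "s"
            payload += _pad(arg.encode("ascii"))
        else:
            raise TypeError(f"unsupported OSC argument: {arg!r}")
    return _pad(address.encode("ascii")) + _pad(type_tags.encode("ascii")) + payload


def send_button_press(host: str, port: int, button_id: int) -> bytes:
    """Send a hypothetical 'button pressed' command to the playback machine over UDP."""
    packet = osc_message("/tablet/button", button_id, 1)  # address is an assumption
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))
    return packet
```

In a setup like this, the tablet would call something like `send_button_press("192.168.0.10", 9000, 3)` on each touch (host and port are placeholders), and the machine driving the TV would listen on that port and map the incoming address to a graphic change.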

In the hibernation bay, there were twelve pods with four tablets per pod, plus backups, which meant more than fifty tablets that needed to display vital signs for whichever character was in the pod.

Since the extras were constantly shifting between pods, we had to have a way to quickly select the right name and information for each passenger. We did this by building a database of passenger information that could be accessed via a drop-down list on each tablet, which let us reconfigure the room in just a few minutes.
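The idea of a shared passenger database behind a drop-down list can be sketched in a few lines of Python. This is only an illustration of the concept, not the software used on the film: the record fields, the placeholder passenger names, and the `PodTablet` class are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Passenger:
    """One database record; the fields are assumptions for illustration."""
    name: str
    heart_rate_bpm: int
    body_temp_c: float


# Placeholder records, not real data from the production.
PASSENGERS = {
    p.name: p
    for p in [
        Passenger("Passenger B", 42, 33.1),
        Passenger("Passenger A", 40, 32.8),
        Passenger("Passenger C", 44, 33.4),
    ]
}


def dropdown_options(db: dict) -> list:
    """Names to show in the tablet's drop-down list, in alphabetical order."""
    return sorted(db)


class PodTablet:
    """A tablet that shows vital signs for whichever passenger is selected."""

    def __init__(self, pod_number: int, db: dict):
        self.pod_number = pod_number
        self.db = db
        self.current = None

    def select(self, name: str) -> None:
        # Raises KeyError if the name is not in the database.
        self.current = self.db[name]

    def display_text(self) -> str:
        if self.current is None:
            return f"Pod {self.pod_number}: unassigned"
        p = self.current
        return (f"Pod {self.pod_number}: {p.name} | "
                f"{p.heart_rate_bpm} bpm | {p.body_temp_c:.1f} C")
```

When an extra moves to a different pod, reconfiguring that pod's tablets comes down to one `select` call per tablet, for example `tablet.select("Passenger A")`, rather than loading new content onto the device.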

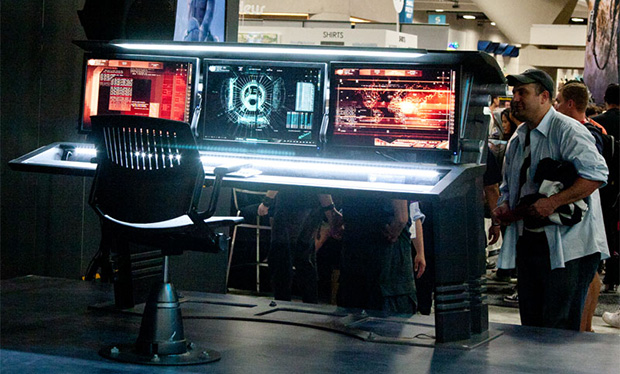

Since Passengers takes place in a high-tech spaceship, tablets were embedded in the walls throughout the corridors and rooms. Everything from door panels to elevator buttons and each room’s environmental controls was displayed on touch screens.

Because of how the set was constructed, many of the tablets were inaccessible once mounted in place. We’d start by loading the required content before the tablets were mounted, but we also needed a way to make modifications through the device itself if changes were necessary.

The beauty of using a game engine is that it renders graphics in real time. Whenever color, text, sizing, positioning or speed needed to be changed, we could make the change quickly and remotely, either with a game controller or interactively on the tablet itself. This kept us from causing the kind of delays in the shooting schedule that would have resulted if we’d had to rip devices out of the walls every time a change was made.

I learned a lot about Unity’s capabilities on this movie and I’m excited to continue exploring the boundaries of using game engines in ways they weren’t necessarily built for. This experience has allowed me to refine and expand upon what I thought was possible, and I look forward to using even more advanced versions of my playback software on future projects. That said, without amazing graphics to play back, the best software in the world still won’t get the job done. Chris Kieffer, Coplin LeBleu and Sal Palacios at Warner Bros. produce graphics that are second to none, and I’m always grateful for the times I get to work alongside them.

To read more, here is a link to my article in Local 695 Production Sound & Video Magazine.

Passengers photos courtesy of Columbia Pictures